9.4. Limits of Parallelism and Scaling

While hardware support is required to achieve parallel computation, it is not sufficient on its own. Many problems or algorithms simply do not support parallel computation. For example, consider merge sort as shown in Code Listing 9.2. Although it is true that the left and right halves of the array could be sorted in parallel with two threads, the merge() routine cannot be parallelized; a single thread must traverse through both the left and right halves to assemble the results. Consequently, there are limits to how much merge sort can be improved with parallel execution.
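The structure described above can be sketched in a few lines. The following Python sketch is not Code Listing 9.2; the function names (`merge`, `merge_sort`, `two_thread_merge_sort`) are chosen here for illustration. Note also that CPython threads do not run Python bytecode in parallel, so this shows only the shape of the computation: two independent halves followed by a single sequential merge.

```python
from concurrent.futures import ThreadPoolExecutor


def merge(left, right):
    """Sequential step: one thread walks both sorted halves."""
    result = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            result.append(left[i])
            i += 1
        else:
            result.append(right[j])
            j += 1
    # At most one of these two slices is non-empty.
    result.extend(left[i:])
    result.extend(right[j:])
    return result


def merge_sort(values):
    """Ordinary single-threaded merge sort."""
    if len(values) <= 1:
        return list(values)
    mid = len(values) // 2
    return merge(merge_sort(values[:mid]), merge_sort(values[mid:]))


def two_thread_merge_sort(values):
    """Sort the two halves in separate threads, then merge sequentially."""
    mid = len(values) // 2
    with ThreadPoolExecutor(max_workers=2) as pool:
        left_future = pool.submit(merge_sort, values[:mid])
        right_future = pool.submit(merge_sort, values[mid:])
        left = left_future.result()
        right = right_future.result()
    # The final merge cannot start until both halves are done,
    # and it runs on a single thread.
    return merge(left, right)
```

However many threads sort the halves, the final call to `merge` still touches every element once on a single thread, which is the sequential portion that limits the overall improvement.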

9.4.1. Amdahl’s Law and Strong Scaling

Amdahl’s law provides a way to quantify the theoretical maximum speedup in latency (also called the speedup factor or just speedup) that can occur with parallel execution. Specifically, Amdahl’s law describes the ratio of the original execution time to the improved execution time, assuming perfect parallelism and no overhead penalty. That is, Amdahl’s law provides a theoretical limit to how much faster a program can run if it is parallelized. If $p$ denotes the fraction of a program that can be executed in parallel and $N$ denotes the number of parallel execution units, Amdahl’s law states that the theoretical maximum speedup $S$ would be:

$$S = \frac{1}{(1 - p) + \frac{p}{N}}$$

This formula can be naturally derived by taking the ratio of the original execution time and the improved execution time for the parallelized version. If we use $T_{orig}$ to denote the original execution time, then $(1 - p) T_{orig}$ would signify the portion of the execution time that must run sequentially. Assuming perfect parallelism as Amdahl’s law does, the remainder of the time, $p T_{orig}$, would be divided across the $N$ processors. This gives us the derivation of Amdahl’s law:

$$S = \frac{T_{orig}}{(1 - p) T_{orig} + \frac{p}{N} T_{orig}}$$

By cancelling out the $T_{orig}$ values from the numerator and denominator, we are left with the formulation of Amdahl’s law above. A variant of Amdahl’s law uses $f$ to denote the portion that must be run sequentially; that is, $f = 1 - p$. This leads to another formulation of Amdahl’s law. The result is identical to the original formulation, but the calculation might be easier:

$$S = \frac{1}{f + \frac{1 - f}{N}} = \frac{N}{1 + (N - 1) f}$$
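Both formulations translate directly into code. The following Python sketch uses hypothetical function names and assumes the sequential-fraction form is written as $N / (1 + (N - 1) f)$, which is algebraically equivalent to the original; it is meant only as a way to check the arithmetic.

```python
def amdahl_speedup(p, n):
    """Maximum theoretical speedup when a fraction p runs on n units."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a fraction between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / ((1.0 - p) + p / n)


def amdahl_speedup_sequential(f, n):
    """Same law, expressed with the sequential fraction f = 1 - p."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be a fraction between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return n / (1.0 + (n - 1) * f)


# Illustrative values only: 75% parallel code on three execution units.
p, n = 0.75, 3
print(f"{amdahl_speedup(p, n):.2f}")
print(f"{amdahl_speedup_sequential(1.0 - p, n):.2f}")
```

Both calls print the same value, since the two functions compute the same quantity from complementary inputs.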

Example 9.4.1

As an example, consider a program that runs in 20 ms. The way the program is written, 20% of it must be run sequentially; the remaining 80% will be run in parallel on a quad-core processor. Per Amdahl’s law, the maximum theoretical speedup of this program would be:

$$S = \frac{1}{(1 - 0.8) + \frac{0.8}{4}} = \frac{1}{0.2 + 0.2} = \frac{1}{0.4} = 2.5$$

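Using the alternative formulation with $f = 0.2$, we would still get the same result:

$$S = \frac{1}{0.2 + \frac{1 - 0.2}{4}} = \frac{4}{1 + (4 - 1)(0.2)} = \frac{4}{1.6} = 2.5$$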

In this case, we could also determine that the parallelized version would spend 4 ms in the sequential portion of the program. The remaining portion (16 ms in the original) would be divided across the 4 cores, so the parallel portion would take 4 ms. Consequently, parallelizing the program would improve the run time from 20 ms to 8 ms, which is a speedup factor of 2.5. The advantage of Amdahl’s law, however, is that we did not need to know the original run time. As long as we know what portion can be parallelized and how many processing units we have, we can determine the speedup factor. Amdahl’s law also emphasizes a key point about parallelism: Increasing the percentage of a program that can be parallelized has more impact than increasing the amount of parallelism.
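The timing breakdown in this example can be reproduced with a short calculation. In the Python sketch below, `run_time_breakdown` is a hypothetical helper introduced for illustration; it assumes perfect parallelism with no overhead, as Amdahl’s law does.

```python
def run_time_breakdown(t_orig_ms, p, n):
    """Return (sequential_ms, parallel_ms, total_ms) after parallelizing.

    t_orig_ms: original run time, p: parallel fraction, n: number of cores.
    """
    sequential_ms = (1.0 - p) * t_orig_ms   # portion that cannot be split
    parallel_ms = p * t_orig_ms / n         # remainder divided across n cores
    return sequential_ms, parallel_ms, sequential_ms + parallel_ms


seq_ms, par_ms, total_ms = run_time_breakdown(20.0, 0.8, 4)
print(f"sequential {seq_ms:.1f} ms, parallel {par_ms:.1f} ms, "
      f"total {total_ms:.1f} ms, speedup {20.0 / total_ms:.2f}")

# The speedup does not depend on the original run time.
for t_orig_ms in (20.0, 100.0, 3600.0):
    _, _, improved_ms = run_time_breakdown(t_orig_ms, 0.8, 4)
    print(f"{t_orig_ms:.0f} ms -> speedup {t_orig_ms / improved_ms:.2f}")
```

The loop changes only the original run time, and the ratio stays the same each time, which is why the law can be stated without $T_{orig}$.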

Example 9.4.2

To illustrate this point, let us consider two variants on the previous scenario. In one variant, the program has been restructured so that 90% can be parallelized rather than 80%. This now leads to a speedup factor of:

$$S = \frac{1}{(1 - 0.9) + \frac{0.9}{4}} = \frac{1}{0.1 + 0.225} = \frac{1}{0.325} \approx 3.08$$

In the second variant, we can still only parallelize 80% of the program, but we have increased the number of cores from four to six. This variant produces a speedup of:

$$S = \frac{1}{(1 - 0.8) + \frac{0.8}{6}} \approx \frac{1}{0.2 + 0.133} \approx 3.0$$

In other words, increasing the percentage of parallelized code by 12.5% (from 80% to 90%) yielded a larger improvement than increasing the number of cores by 50% (from four to six).
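The comparison between the two variants can be checked numerically. This Python sketch restates Amdahl’s law in a hypothetical `amdahl_speedup` function and evaluates the baseline and both variants; the variable names are illustrative.

```python
def amdahl_speedup(p, n):
    """Maximum theoretical speedup when a fraction p runs on n units."""
    return 1.0 / ((1.0 - p) + p / n)


baseline = amdahl_speedup(0.8, 4)          # original scenario
more_parallel_code = amdahl_speedup(0.9, 4)  # variant 1: 90% parallel, 4 cores
more_cores = amdahl_speedup(0.8, 6)          # variant 2: 80% parallel, 6 cores

print(f"baseline:           {baseline:.2f}")
print(f"90% parallel code:  {more_parallel_code:.2f}")
print(f"six cores:          {more_cores:.2f}")
```

Under these assumptions, restructuring the code gives the larger speedup even though the relative change in $p$ is much smaller than the relative change in $N$.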

As the number of processing units continues to increase, the precise calculation of Amdahl’s law becomes less important. Specifically, we can determine a faster approximation of the speedup limit by considering the impact of an arbitrarily large number of processing units; that is, we can derive a simplified estimate by calculating the limit of $S$ as $N$ goes to infinity:

$$\lim_{N \to \infty} S = \lim_{N \to \infty} \frac{1}{(1 - p) + \frac{p}{N}} = \frac{1}{1 - p}$$

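Using this simplified estimate, we can determine that the upper bound on the speedup for a program in which 80% can be parallelized (as in Example 9.4.1 and the second variant of Example 9.4.2) would be 5 (i.e., 1 / 0.2). No number of additional cores can push the speedup past this bound.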
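To see how quickly the speedup approaches this bound, the sketch below evaluates Amdahl’s law for a growing number of processing units. The function names and the chosen core counts are illustrative assumptions, not part of the original text.

```python
def amdahl_speedup(p, n):
    """Maximum theoretical speedup when a fraction p runs on n units."""
    return 1.0 / ((1.0 - p) + p / n)


def speedup_limit(p):
    """Upper bound on speedup as the number of units grows without limit."""
    return 1.0 / (1.0 - p)


p = 0.8
core_counts = (4, 16, 64, 1024, 1_000_000)
speedups = [amdahl_speedup(p, n) for n in core_counts]

for n, s in zip(core_counts, speedups):
    print(f"N = {n:>9}: speedup {s:.4f}")
print(f"limit as N grows: {speedup_limit(p):.4f}")
```

Each increase in $N$ yields a smaller gain than the last, and every value stays below $1 / (1 - p)$.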

9.4.2. Gustafson’s Law and Weak Scaling

Although Amdahl’s law provides an initial estimate to quantify the speedup from parallel execution, it is important to note that it rests on unrealistic assumptions. Amdahl’s law assumes that the problem solved by the program exhibits strong scaling, meaning that the difficulty of the problem is unaffected by the number of processors involved. In perfectly strong scaling, there is no overhead penalty for creating more threads or using more processors. A program run on a system with 100 processors will run in 1/100th of the time that it would take on a single-processor system. In contrast, a more realistic and common property is weak scaling, which emphasizes accomplishing more work rather than running in less time. In weak scaling, the additional processors are used to tackle bigger and more complex problems, while holding the expected run time to be the same.

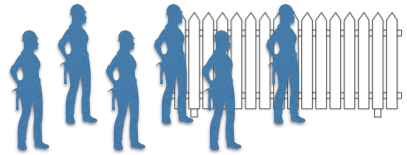

To illustrate the difference between strong and weak scaling, consider a painting business. Figure 9.4.3 illustrates the scenario where the company has been hired to paint a fence that is 20 feet in length. This job initially seems to exhibit strong scaling. If one painter could finish painting the fence in one hour, then four painters could probably finish the job in 15 minutes. However, as more painters are added, the scaling becomes weak. If the company tries to send 20 painters for the same fence, they are unlikely to finish the job in only three minutes. Rather, the fence would become too crowded and painters would have to wait on each other. A better choice for the company would be to send the additional 16 painters to paint other fences. If they work in groups of four to paint multiple 20-foot fences, the company could paint five fences in a 15-minute time period. Alternatively, the company could choose to send just one painter per fence, completing 20 fences in a single hour. The fences are not necessarily painted any faster than before, but the company is getting more work accomplished in the same amount of time.

The usefulness of Amdahl’s law is limited by its reliance on strong scaling and unrealistic assumptions of parallel execution. Specifically, Amdahl’s law deliberately ignores any performance cost associated with creating and managing threads, as well as system-specific factors such as NUMA or processor workload. Amdahl’s law is also limited by its exclusive focus on parallelism; for example, it cannot be used to predict the impact of changing the layout of data within a NUMA architecture. Gustafson’s law provides an alternative formulation for speedup that addresses these limitations.

Similar to Amdahl’s law, Gustafson’s law uses $p$ to denote the fraction of the work that can benefit from an improvement of some sort. Unlike Amdahl’s law, this improvement is not tied solely to parallelism; the improvement could result from a change in how the OS manages threads, moving data around within a NUMA architecture, increasing the parallelism, or any other such change. The amount of the improvement [1] is denoted as $s$. Gustafson’s law then states that the maximum theoretical speedup of deploying the improvement is:

$$S = (1 - p) + s p$$

Example 9.4.3

As an example, consider a program that can be partially improved with parallel execution. Let us assume that 20% of the program cannot be improved and some initial empirical results suggest that the parallel execution portion runs in 1/5th of the time that it takes sequentially (i.e., an improvement factor of 5). Note that this does not assume anything about how many processors are used, so it can be based on more realistic measurements by running some initial tests. In this case, the speedup would be:

$$S = (1 - 0.8) + 5 \times 0.8 = 0.2 + 4 = 4.2$$

It is key to note that this speedup has a different meaning than the speedup described by Amdahl’s law. This speedup factor does not mean that the program runs 4.2 times as fast as the original, which is an assertion built on strong scaling. Instead, the proper interpretation of the Gustafson’s law notion of speedup is that this program can achieve 4.2 times as much work in the same amount of time, which is based on weak scaling. If the original program could process 10 MB of data in a minute, then the improved version could process 42 MB in the same amount of time. With Gustafson’s law, the emphasis is on the throughput (amount of work done) rather than a faster time.
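The calculation and its throughput interpretation can be sketched as follows. The function name `gustafson_speedup` and the variable names are hypothetical; the formula assumed is $S = (1 - p) + s p$, which matches the 4.2 figure in the example.

```python
def gustafson_speedup(p, s):
    """Scaled speedup when a fraction p of the work improves by a factor s."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a fraction between 0 and 1")
    return (1.0 - p) + s * p


# 20% of the program cannot be improved; the rest improves by a factor of 5.
scaled_speedup = gustafson_speedup(0.8, 5)

# Weak-scaling interpretation: more work in the same time, not less time.
original_mb_per_minute = 10.0
improved_mb_per_minute = original_mb_per_minute * scaled_speedup

print(f"scaled speedup: {scaled_speedup:.1f}")
print(f"throughput: {improved_mb_per_minute:.0f} MB per minute")
```

Because `s` is passed in as a measured value rather than derived from a processor count, the same function applies to any kind of improvement, not only added parallelism.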

[1] To be fair, Gustafson’s law can also be criticized for ignoring complicating factors such as synchronization or communication overhead. However, this objection is not as strong as it is for Amdahl’s law, as the improvement factor $s$ can be determined empirically.