7.7. Deadlock¶

The synchronization primitives described in this chapter provide flexible mechanisms to solve many problems related to the timing of concurrent threads. These mechanisms can enforce mutually exclusive access to critical sections, they can send signals of custom events, they can require a minimum number of threads reach a common point, and so on. However, there are common pitfalls and errors that can arise from their use. One problem (described in the section on locks) is making the critical section the wrong size, introducing slow performance. But that is a minor problem in comparison to deadlock.

Deadlock is the permanent and unresolvable blocking of two or more threads that results from each waiting on the other. It is important to note that deadlock is different from starvation, where a thread may have to wait for a long time. For instance, consider a thread that repeatedly tries to lock a mutex; when it fails, it goes to sleep for a while and tries again. Now, assume this thread is very unlucky and it fails every time. This thread is not experiencing deadlock; it is experiencing starvation. The difference is that, with starvation, it is possible that the thread might get lucky in the future. With deadlock, there is no possibility.

Code Listing 7.18 provides a simple illustration of how deadlock

can happen. There are two threads, running the first() and second()

functions. The code for first() tries to acquire semaphore sem_a before

sem_b, while second() acquires them in the opposite order.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 | /* Code Listing 7.18:

Code that is likely to lead to deadlock

*/

/* struct contains shared semaphores */

struct args {

sem_t sem_a;

sem_t sem_b;

};

void *

first (void * _args)

{

struct args *args = (struct args *) _args;

/* Acquire "sem_a" before "sem_b" */

sem_wait (&args->sem_a);

sem_wait (&args->sem_b);

/* other code here */

}

void *

second (void * _args)

{

struct args *args = (struct args *) _args;

/* Acquire "sem_b" before "sem_a" */

sem_wait (&args->sem_b);

sem_wait (&args->sem_a);

/* other code here */

}

|

The problem arises if there is a thread switch after one of the threads

successfully acquires the first semaphore it needs. That is, assume that

first() runs and acquires sem_a, then a thread switch occurs. At that

point, second() starts running and acquires sem_b. But second() gets

blocked when it tries to acquire sem_a, which is held by first(). At

that point, the system switches back to first(), which gets blocked trying

to acquire sem_b. At this point, both threads are waiting on each other

permanently.

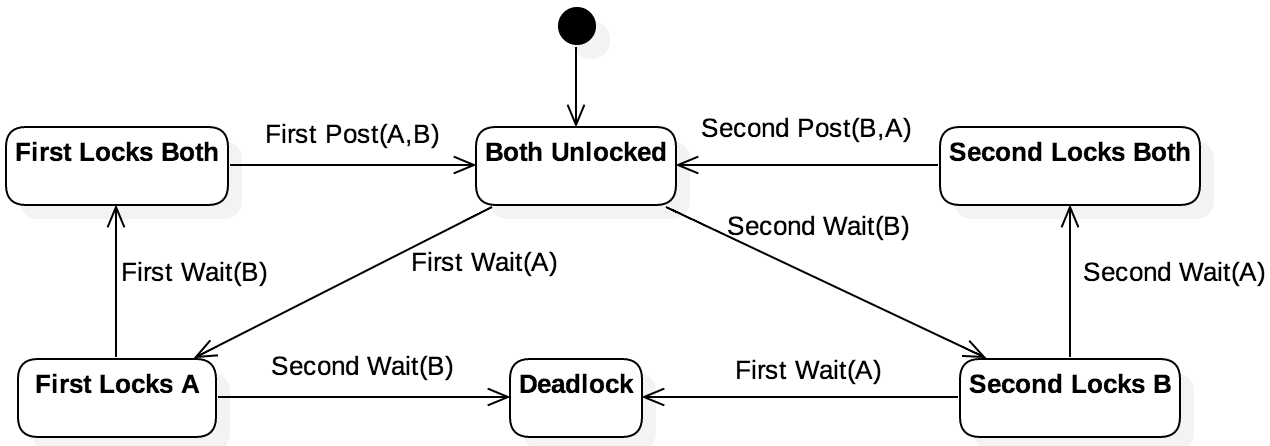

It is important to emphasize that code like that shown in Code Listing 7.18 does not guarantee that deadlock occurs. This fact becomes clear

when you consider Figure 7.7.1, which shows the state

model for this code. Once first() acquires sem_a, the emergence of

deadlock depends on second() waiting on sem_b before first() does.

If the order is different, the system could return back to the state in which

neither semaphore is acquired.

To make the description a little more concrete, imagine that you and a friend are sitting at a table to eat. There is one pair of chopsticks available; in order to eat, you need both chopsticks. When the food arrives, you both forget your manners and try to grab the chopsticks first. You manage to grab one while your friend got the other. You’re both so hungry that you refuse to let go of the chopstick you have. But neither of you can eat, because you only have one chopstick. And since neither of you will give up the one you have, you’re stuck.

7.7.1. Necessary Conditions¶

So what exactly causes deadlock? First, it’s important to note that deadlock is a race condition. You might write a program that runs millions of times over the span of years, only avoiding deadlock through lucky timing. As the code base gets larger and the system gets more complex, the possibility of deadlock arising gets even worse. For instance, you might have multiple threads that (without your awareness) use synchronization primitives through layers of encapsulated code. At some point, the timing of these primitives may line up precisely to cause deadlock.

There are four necessary conditions that can lead to deadlock. The first three conditions are generally unavoidable system characteristics. Deadlock could be made impossible by eliminating a single one of these characteristics.

- Mutual exclusion: Once a resource has been acquired up to its allowable capacity, no other thread is granted access.

- No preemption: Once a thread has acquired a resource, the resource cannot be forcibly taken away. For instance, only the owner of a mutex can unlock it.

- Hold and wait: It is possible that a thread can acquire one resource and retain ownership of that resource while waiting on another.

In modern, general-purpose computing, these three characteristics are de facto

requirements. Violating any of these characteristics would make concurrent

systems development significantly more difficult. For instance, by throwing out

mutual exclusion, pthread_mutex_lock() would not block a thread if the mutex

has already been acquired. By eliminating the no preemption characteristic, a

thread would repeatedly need to check if it still holds the mutex or if the

semaphore’s value is still acceptable.

Eliminating hold and wait would lead to devastating and undesirable performance. For instance, consider a database with millions of records, each of which can be locked independently. Once a transaction is ready to commit, the thread may acquire a single manager lock for the entire database. The reason for this split design is that manipulating the local record may require several operations and take a while, but they are independent and can happen in parallel. The final commit must happen one at a time, but it is very quick. Eliminating hold and wait would create the worst possible combination. Since both locks would have to be acquired and released at the same time, it would be impossible for the records to be manipulated in parallel. At the same time, the manager lock would have to be held for far too long while a single record is manipulated.

Ultimately, the three characteristics above are unavoidable and undesirable in modern systems. But it’s important to note that these three characteristics by themselves are not sufficient for deadlock. Deadlock is a race condition that arises by the fourth necessary condition.

- Circular wait: One thread needs a resource held by another, while this second thread needs a different resource held by the first.

Circular wait can be extended for more than two threads intuitively. Thread A needs something from thread B, which needs something from thread C, which is waiting on thread A. This chain of dependencies can be made arbitrarily long. Counterintuitively, circular wait can also arise even if there are multiple copies of a resource. This is a complex situation that arises in very advanced types of systems, though, and we refer interested readers to textbooks on OS design for more information.

Bug Warning

It is a common mistake to assume that deadlock only arises with certain types of synchronization primitives, such as mutex locks. This confusion arises because the term mutual exclusion is often used to mean that only one thread has access to a critical section. The term is used more broadly here, allowing for the possibility that there may be multiple concurrent accesses; however, those accesses are blocking out some others. Ultimately, deadlock can arise with the use of locks, semaphores, barriers, or condition variables.

7.7.2. Livelock and False Solutions¶

Livelock is a race condition that is similar to deadlock, though there is a slight difference. The key distinction with deadlock and livelock is whether or not the thread is changing state and executing anything. With deadlock, a thread is blocked and not executing any code; with livelock, the thread repeatedly changes state between blocked and unblocked, but accomplishes nothing.

As a simple illustration of livelock, consider Code Listing 7.19,

which attempts to fix the deadlock in the example above. In this code, after

successfully acquiring the first semaphore, the threads use sem_try_wait()

on the second. If the wait fails (because the semaphore is locked by the other

thread), the thread releases the semaphore it has first acquired and starts over.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 | /* Code Listing 7.19:

Livelock occurs when the system is not deadlocked but no work is done

*/

void *

first (void * _args)

{

struct args *args = (struct args *) _args;

/* Acquire "sem_a" before trying to acquire "sem_b" */

while (1)

{

sem_wait (&args->sem_a);

/* Now try for the sem_b, breaking out if successful */

if (sem_try_wait (&args->sem_b))

break;

/* Failed to acquire sem_b, so start over */

sem_signal (&args->sem_a);

}

/* other code here */

}

void *

second (void * _args)

{

struct args *args = (struct args *) _args;

/* Acquire "sem_b" before trying to acquire "sem_a" */

while (1)

{

sem_wait (&args->sem_b);

/* Now try for the sem_a, breaking out if successful */

if (sem_try_wait (&args->sem_a))

break;

/* Failed to acquire sem_a, so start over */

sem_signal (&args->sem_b);

}

/* other code here */

}

|

This code makes deadlock impossible by voluntarily breaking the characteristic of hold and wait. Note that it works in this scenario because no work is done after the first semaphore is acquired. This approach is not generalizable, though, because most systems will perform work in between the two semaphore acquisitions; in many instances, that work performed cannot be undone.

While the code avoids deadlock, it still allows for the possibility of livelock. That is, it is possible that both threads repeatedly are successful when acquiring the first semaphore, leading to both failing at the second. Then both threads release the semaphore they hold and start over again. If this keeps happening, the threads are experiencing livelock. They are not in deadlock because there is the possibility that a good timing can lead to recovery. However, unlucky timing is causing the threads to repeatedly block each other, preventing the system from making progress.

In practice, this code sample is unlikely to experience livelock for a long time. Specifically, it is very improbable that a thread switch occurs at the exact same moment repeatedly. But this code structure is a very simple and special case. As such, this structure is not considered a reliable solution to avoiding deadlock.

7.7.3. Avoiding Deadlock¶

As three of the deadlock conditions (mutual exclusion, no preemption, hold and wait) are standard features in modern systems, the typical solution to the avoiding deadlock in software[1] is to avoid circular waiting. There are a variety of strategies that can be employed to avoid circular waiting that can be applied, depending on the needs of the system being built.

- Impose an ordering on resources. If one thread acquires

sem_aprior to acquiringsem_b, require other threads to follow this order.- Use timed or non-blocking variants that can provide immediate notification of failure. If a function like

pthread_mutex_trylock()orpthread_cond_timedwait()returns an error, release other held resources and try again later.- Limit the number of potential thread accesses. For instance, consider a scenario where there are five resource instances, and each thread needs two of them. Using a semaphore initialized to four would guarantee at least one thread will always have access to both the instances it needs; this strategy allows that thread to finish its work, eventually releasing both resource instances for the other threads to use.

- Employ higher-level synchronization primitives and strategies. In the next chapter, we describe some common well-known solutions that are known to be deadlock-free.

| [1] | Advanced systems software, such as OS kernels, use a variety of techniques for solving the deadlock problem. These include applying the Banker’s algorithm for deadlock avoidance or executing deadlock detection algorithms that can alert a system administrator. Each approach has its benefits and drawbacks, and there is no universally accepted solution. |