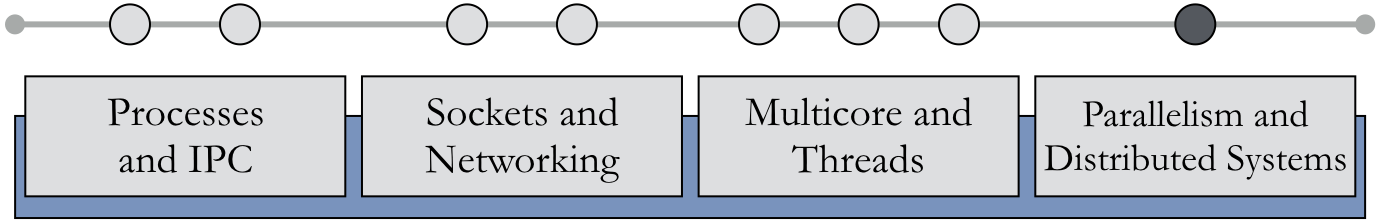

9.1. Parallel and Distributed Systems¶

“A distributed system is one in which the failure of a computer you didn’t even know existed can render your own computer unusable.”

As with multithreading, the general concepts of distributed computing are decades old. The Internet, for instance, can be characterized as a distributed computing system. Leslie Lamport introduced distributed timestamps in 1978. However, the past two decades have brought these techniques to new heights with massively parallel supercomputers (such as Cray Titan or IBM BlueGene) and grid computing (Berkeley Open Infrastructure for Network Computing (BOINC), Folding@home, Great Internet Mersenne Prime Search (GIMPS)). Furthermore, the domains of parallel and distributed computing remain key areas of computer science research. This chapter provides an introduction to key results and concepts in these areas, providing a foundation for interested readers to pursue more advanced study.

Chapter Objectives

In this chapter, we will address the following instructional objectives:

- We will distinguish the notions of concurrency and parallelism and examine the relationship of both techniques to the hardware capabilities.

- We will explore parallel design patterns that can be applied toward the construction of algorithms, program implementations, or program execution.

- We will examine the theoretical and practical limits to parallelism.

- We will consider three foundational concepts—timing of events, object location, and consensus—of distributed systems that have been very influential in this field.

The principles of concurrency and synchronization can apply to a wide variety of systems. In particular, throughout most of the history of computing, most systems implemented multiprogramming in the software (the operating system) to improve utilization on a processor that was capability of one task at a time. In recent years, there has been an increasing focus on and adoption of hardware capable of parallel execution units. The ability to exploit parallelism on modern hardware makes it possible to achieve significant gains in the speed of computing.

The benefits of parallelism, however, are not automatic and unlimited. Creating software that can take full advantage of hardware support for parallelism requires careful design and implementation. Some algorithms simply cannot be executed in parallel; for algorithms that can be parallelized, the code must be properly structured to account for the nondeterministic nature of the timing that can arise. Furthermore, even if the algorithm can be parallelized, there are theoretical and practical limits to how much the speed can be improved. In this chapter, we will explore these issues, strategies, and limits of parallelism.

In addition to parallelism on a single computer, we can link a larger collection of distributed systems of independent computers. The goal of distributed systems is to link many computers together, executing coordinated software to accomplish a single, larger goal. Distributed computing involves confronting the challenge of unexpected and intermittent failures of nodes in the system. As an introduction to this area of computing, we will explore how computer scientists have addressed three well-known challenges in distributed computing: communicating the timing of events, achieving an agreement — known as consensus — on the state of the system, and storing data so that it can still be accessed when nodes fail. Readers who find these topics of interest should explore the recommended readings at the end of the chapter.