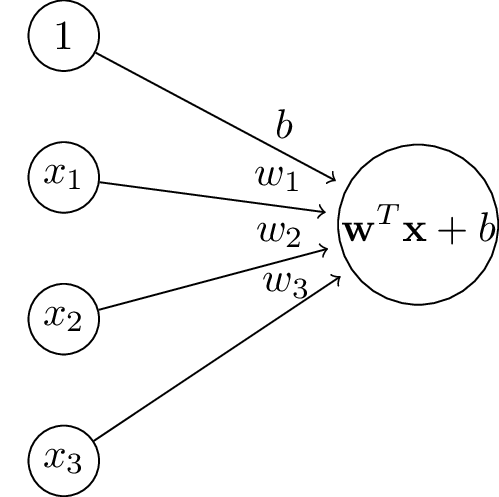

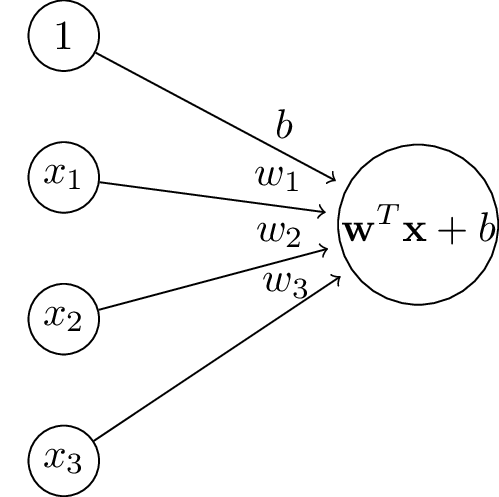

Review: Linear Regression

The goal was to find weight values that minimize the mean squared error (MSE) on some training data, i.e., to fit a hyperplane.
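As a refresher, here is a minimal Python sketch of the quantity being minimized. It assumes the prediction for input \(\mathbf{x}_i\) is the hyperplane \(\mathbf{w}^T\mathbf{x}_i + b\); the function name `mse` and the toy data are illustrative, not from the slides.

```python
from typing import Sequence

def mse(w: Sequence[float], b: float,
        X: Sequence[Sequence[float]], y: Sequence[float]) -> float:
    """Mean squared error of the hyperplane prediction w^T x_i + b."""
    errors = [(y_i - (sum(w_j * x_j for w_j, x_j in zip(w, x_i)) + b)) ** 2
              for x_i, y_i in zip(X, y)]
    return sum(errors) / len(errors)

# Toy data that lies exactly on the hyperplane y = x1 + 2*x2 + 1.
X = [[0.0, 1.0], [1.0, 0.0], [2.0, 2.0]]
y = [3.0, 2.0, 7.0]
print(mse([1.0, 2.0], 1.0, X, y))  # 0.0: a perfect fit
print(mse([0.0, 0.0], 0.0, X, y))  # (9 + 4 + 49) / 3 for the all-zero model
```

Training linear regression means searching for the `w` and `b` that make this number as small as possible.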


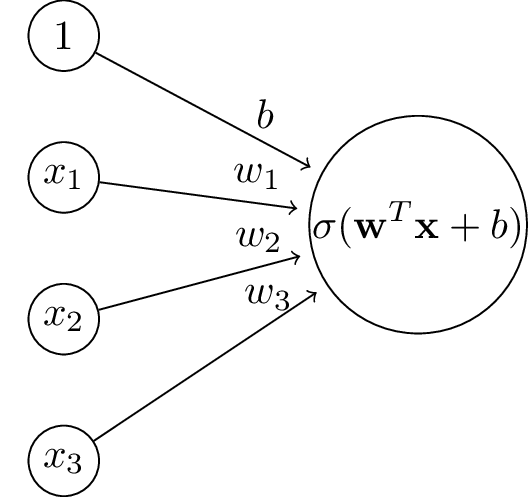

This is the logistic or sigmoid function: \[\sigma(z) = \frac{e^z}{1 + e^{z}} = \frac{1}{1 + e^{-z}}\]

It looks like this:

The sigmoid squashes any real-valued input into the interval \((0, 1)\), so after applying this non-linearity we can interpret the output as a probability.
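A minimal Python sketch of the sigmoid (the function name `sigmoid` is our own). It branches on the sign of \(z\) so that `math.exp` is only ever called on a non-positive argument, which avoids overflow for large \(|z|\); the two branches are the two equivalent forms of \(\sigma(z)\) given above.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function, computed without overflowing math.exp."""
    if z >= 0:
        # 1 / (1 + e^{-z}): here e^{-z} <= 1, so it cannot overflow.
        return 1.0 / (1.0 + math.exp(-z))
    # e^z / (1 + e^z): here e^z < 1, so it cannot overflow either.
    ez = math.exp(z)
    return ez / (1.0 + ez)

for z in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    print(z, sigmoid(z))
```

In floating point the extreme inputs round to exactly 0.0 and 1.0, even though mathematically the output never reaches either value.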

The logistic function has a simple derivative:

\[\sigma'(z) = \sigma(z)(1 - \sigma(z))\]
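One way to convince yourself of the identity \(\sigma'(z) = \sigma(z)(1 - \sigma(z))\) is to compare it against a numerical derivative. The sketch below (Python; the helper names are hypothetical) uses a central finite difference with step \(h = 10^{-6}\), an arbitrary but typical choice.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z: float) -> float:
    # Closed form from the slide: sigma'(z) = sigma(z) * (1 - sigma(z)).
    s = sigmoid(z)
    return s * (1.0 - s)

def numerical_derivative(f, z: float, h: float = 1e-6) -> float:
    # Central finite-difference approximation of f'(z).
    return (f(z + h) - f(z - h)) / (2.0 * h)

for z in (-3.0, 0.0, 1.5):
    print(z, sigmoid_prime(z), numerical_derivative(sigmoid, z))
```

The two columns agree to many decimal places; at \(z = 0\) the derivative is exactly \(0.5 \times 0.5 = 0.25\), its maximum.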

The goal is to learn the parameters \(\theta\) of a probabilistic model.

One option is to find the single most probable set of parameters given our data and our prior, the maximum a posteriori (MAP) estimate:

\[\theta_{MAP} = \text{argmax}_\theta P(\mathcal{D} \mid \theta) P(\theta)\]

Laplace smoothing for naive Bayes can be seen as an example of this!
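One way to see the connection in the two-outcome case (a coin, rather than naive Bayes's per-feature counts): add-one smoothing estimates \(\theta = (\text{heads} + 1) / (n + 2)\), which is exactly the MAP estimate under a prior \(P(\theta) \propto \theta(1 - \theta)\), i.e. a Beta(2, 2) prior. The Python sketch below finds the MAP estimate by brute-force grid search and compares it with the smoothed count. The choice of prior and the helper names are our assumptions for the illustration.

```python
def likelihood(theta: float, heads: int, tails: int) -> float:
    # P(D | theta) for independent coin flips.
    return theta ** heads * (1.0 - theta) ** tails

def prior(theta: float) -> float:
    # Unnormalized Beta(2, 2) density (assumed prior): proportional to theta * (1 - theta).
    return theta * (1.0 - theta)

def map_estimate(heads: int, tails: int, steps: int = 10_000) -> float:
    # Grid search for argmax_theta P(D | theta) * P(theta) over the open interval (0, 1).
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=lambda t: likelihood(t, heads, tails) * prior(t))

heads, tails = 2, 1
print(map_estimate(heads, tails))          # grid-search MAP estimate
print((heads + 1) / (heads + tails + 2))   # add-one (Laplace) smoothing
```

Both lines come out at about 0.6, whereas the unsmoothed fraction of heads would be 2/3.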

An even simpler alternative is to just pick the parameters that make the data most probable, the maximum likelihood (ML) estimate: \[\theta_{ML} = \text{argmax}_\theta P(\mathcal{D} \mid \theta)\]

\(P(\mathcal{D} \mid \theta)\) is commonly referred to as the likelihood. Assuming the data points are independent and identically distributed, it factors into a product over the data set: \[\mathcal{L}(\theta) = P(\mathcal{D} \mid \theta) = \prod_{\mathbf{x}_i \in \mathcal{D}} P(\mathbf{x}_i \mid \theta)\] (The likelihood isn’t really a probability distribution… Viewed as a function of \(\theta\), with the observed data held fixed, it need not sum or integrate to 1 over parameter values. It is a measure of how probable (likely) that data is given our model parameters.)

Simple example… Let’s say \(\theta\) represents the probability that a rigged coin will come up heads: \(\theta = 0.9\). What is the likelihood of observing the following outcomes? \[[Heads,\; Tails,\; Heads]\]
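Working the example in Python, assuming the flips are independent so the likelihood is a product of per-flip probabilities. Encoding outcomes as `"H"`/`"T"` strings is our own choice.

```python
import math
from typing import Sequence

def likelihood(theta: float, outcomes: Sequence[str]) -> float:
    # L(theta) = product of P(x_i | theta), assuming independent flips.
    # "H" contributes theta, "T" contributes 1 - theta.
    return math.prod(theta if x == "H" else 1.0 - theta for x in outcomes)

print(likelihood(0.9, ["H", "T", "H"]))  # approximately 0.081 (0.9 * 0.1 * 0.9)
```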

What value of \(\theta\) would maximize the likelihood for this data set?
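A brute-force check in Python: evaluate the likelihood on a grid of \(\theta\) values and keep the best one (the grid spacing of 0.001 is an arbitrary choice). For coin flips the maximizer is the observed fraction of heads, here \(2/3\).

```python
import math
from typing import Sequence

def likelihood(theta: float, outcomes: Sequence[str]) -> float:
    # Product of per-flip probabilities, assuming independent flips.
    return math.prod(theta if x == "H" else 1.0 - theta for x in outcomes)

outcomes = ["H", "T", "H"]
grid = [i / 1000 for i in range(1001)]                # theta = 0.000, 0.001, ..., 1.000
theta_ml = max(grid, key=lambda t: likelihood(t, outcomes))

print(theta_ml)                                        # close to 2/3
print(outcomes.count("H") / len(outcomes))             # closed form: fraction of heads
```

Note that \(\theta = 0.9\) gives a likelihood of about 0.081, while \(\theta = 2/3\) gives \((2/3)^2 (1/3) \approx 0.148\).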

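Numerically, we are usually better off working with the log likelihood. A product of many probabilities can underflow to zero in floating point, while a sum of logs stays in a manageable range; and because \(\log\) is monotonically increasing, the same \(\theta\) maximizes both: \[\mathcal{LL}(\theta) = \sum_{\mathbf{x}_i \in \mathcal{D}} \log P(\mathbf{x}_i \mid \theta)\]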
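A small Python illustration of the numerical problem, using a hypothetical data set of 2000 independent events that each have probability 0.5. The raw product falls below the smallest representable double and becomes exactly 0.0; the log likelihood does not.

```python
import math

probs = [0.5] * 2000  # hypothetical: 2000 independent events, each with probability 0.5

product = 1.0
for p in probs:
    product *= p       # raw likelihood: underflows to 0.0 in double precision

log_likelihood = sum(math.log(p) for p in probs)  # stays a finite, usable number

print(product)          # 0.0
print(log_likelihood)   # about -1386.29, i.e. 2000 * log(0.5)
```

Once the product is 0.0, every parameter setting looks equally (im)probable, so the optimizer has nothing to work with.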

We want a likelihood function for our binary classifier. It could look like this: \[\mathcal{L}(\mathbf{w}) = \prod_{i=1}^n \begin{cases} P(y = 1 \mid \mathbf{x_i}, \mathbf{w}), \text{if } y_i = 1 \\ P(y = 0 \mid \mathbf{x_i}, \mathbf{w}), \text{if } y_i = 0 \\ \end{cases} \]

This is much nicer to work with: \[\mathcal{L}(\mathbf{w}) = \prod_{i=1}^n P(y = 1 \mid \mathbf{x_i}, \mathbf{w})^{y_i} \times P(y = 0 \mid \mathbf{x_i}, \mathbf{w})^{1-y_i} \] (remember… \(a^1 = a, a^0 = 1\))
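A quick Python check that the exponent trick reproduces the piecewise definition: for a binary label, one of the two factors is raised to the power 0 and becomes 1. Here `p1` stands for \(P(y = 1 \mid \mathbf{x_i}, \mathbf{w})\), and the function names are illustrative.

```python
import math

def case_form(p1: float, y: int) -> float:
    # Piecewise version: P(y=1 | x) if the label is 1, otherwise P(y=0 | x) = 1 - p1.
    return p1 if y == 1 else 1.0 - p1

def power_form(p1: float, y: int) -> float:
    # Exponent version: p1**y * (1 - p1)**(1 - y).
    return p1 ** y * (1.0 - p1) ** (1 - y)

for p1 in (0.1, 0.5, 0.9):
    for y in (0, 1):
        assert math.isclose(case_form(p1, y), power_form(p1, y))
print("both forms agree")
```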

This makes the log likelihood: \[\mathcal{LL}(\mathbf{w}) = \sum_{i=1}^n \left[ y_i \log P(y = 1 \mid \mathbf{x_i}, \mathbf{w}) + (1 - y_i) \log P(y = 0 \mid \mathbf{x_i}, \mathbf{w}) \right] \] (remember… \(\log_b(xy) = \log_b(x) + \log_b(y)\) and \(\log_b(x^y) = y\log_b(x)\))

The goal is to maximize the log likelihood, which is the same as minimizing the negative log likelihood: \[-\mathcal{LL}(\mathbf{w}) = -\sum_{i=1}^n \left[ y_i \log P(y = 1 \mid \mathbf{x_i}, \mathbf{w}) + (1 - y_i) \log P(y = 0 \mid \mathbf{x_i}, \mathbf{w}) \right] \]

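Substituting the model's prediction \(P(y = 1 \mid \mathbf{x_i}, \mathbf{w}) = \sigma( \mathbf{w}^T\mathbf{x_i} + b )\) gives the loss function usually called (binary) cross-entropy: \[-\mathcal{LL}(\mathbf{w}) = -\sum_{i=1}^n \left[ y_i \log(\sigma( \mathbf{w}^T\mathbf{x_i} + b )) + (1 - y_i) \log (1 - \sigma( \mathbf{w}^T\mathbf{x_i} + b )) \right] \]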
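A minimal Python sketch of the cross-entropy loss for logistic regression (function names are our own). Rather than calling `math.log` on \(\sigma(z)\) directly, it uses the algebraically identical forms \(-\log \sigma(z) = \log(1 + e^{-z})\) and \(-\log(1 - \sigma(z)) = \log(1 + e^{z})\), evaluated with a stable "softplus" helper, so confident predictions never hit `math.log(0.0)`.

```python
import math
from typing import Sequence

def softplus(z: float) -> float:
    # log(1 + e^z), written so math.exp never receives a large positive argument.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def cross_entropy(w: Sequence[float], b: float,
                  X: Sequence[Sequence[float]], y: Sequence[int]) -> float:
    """Negative log likelihood of 0/1 labels y under P(y=1 | x_i) = sigma(w^T x_i + b)."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        z = sum(w_j * x_j for w_j, x_j in zip(w, x_i)) + b   # w^T x_i + b
        # -log(sigma(z)) = softplus(-z);  -log(1 - sigma(z)) = softplus(z)
        total += y_i * softplus(-z) + (1 - y_i) * softplus(z)
    return total

# With all-zero weights every prediction is 0.5, so the loss is n * log(2).
print(cross_entropy([0.0], 0.0, [[1.0], [2.0]], [1, 0]))
# A confidently wrong prediction (z = 1000, label 0) gives a large but finite loss of about 1000.
print(cross_entropy([1.0], 0.0, [[1000.0]], [0]))
```

A direct implementation of the formula would compute \(\sigma(1000) = 1.0\) in floating point and then fail on \(\log(1 - 1.0)\); the rewritten form avoids that.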

Quiz: What is the cross-entropy loss for a single example with \(\sigma( \mathbf{w}^T\mathbf{x_i} + b ) = 1\) when \(y_i = 0\)? When \(y_i = 1\)?

Remember… \(\log(1) = 0, ~~~ \lim_{p \to 0^+} \log(p) = -\infty\)
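Since `math.log(0.0)` raises `ValueError` in Python instead of returning \(-\infty\), this sketch explores the quiz by letting the prediction approach 1 rather than setting it to exactly 1. `example_loss` is a hypothetical helper that evaluates only the term that is active for the label.

```python
import math

def example_loss(p: float, y: int) -> float:
    # Cross-entropy for one example with predicted P(y = 1) = p.
    # Only the active term is evaluated, so the unused log is never computed.
    return -math.log(p) if y == 1 else -math.log(1.0 - p)

for k in (2, 4, 8):
    p = 1.0 - 10.0 ** -k   # prediction approaching 1
    print(p, example_loss(p, 1), example_loss(p, 0))
```

As the prediction approaches 1, the loss for \(y_i = 1\) shrinks toward 0 while the loss for \(y_i = 0\) keeps growing without bound.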

Zero and one are numbers, so there is no reason we couldn’t use the loss function we used for linear regression: \[E(\mathbf{w}) = \sum_{i=1}^n (y_i - \sigma(\mathbf{w}^T\mathbf{x_i} + b))^2\]
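A small numeric comparison in Python of the per-example terms of the two losses for a positive example (\(y_i = 1\)), as the predicted probability \(p = \sigma(\mathbf{w}^T\mathbf{x_i} + b)\) moves from right to badly wrong. The function names and the chosen values of \(p\) are illustrative.

```python
import math

def squared_error(p: float, y: int) -> float:
    # Per-example term of E(w): (y_i - sigma(w^T x_i + b))^2
    return (y - p) ** 2

def cross_entropy(p: float, y: int) -> float:
    # Per-example term of -LL(w).
    return -math.log(p) if y == 1 else -math.log(1.0 - p)

for p in (0.9, 0.5, 0.1, 0.01, 0.001):
    print(f"p={p:<6} squared={squared_error(p, 1):.4f} cross-entropy={cross_entropy(p, 1):.4f}")
```

With a label of 1, the squared error can never exceed 1 per example, while the cross-entropy grows without bound as the prediction approaches 0, so confidently wrong predictions are penalized much more heavily.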

Here is how the two loss functions compare: