Evaluation Metrics

- The confusion matrix tells the whole story, but we often want a single number to summarize performance.

- Accuracy = Fraction of all predictions that are correct = $\frac{TP + TN}{TP + TN + FP + FN}$

- Sensitive to skew, doesn't distinguish between types of error:

- Type I Error: False Positive

- Type II Error: False Negative

- True Positive Rate (TPR)/Recall = Fraction of positives that are classified as positives = $\frac{TP}{TP + FN}$

- False Positive Rate (FPR) = Fraction of negatives that are classified as positives = $\frac{FP}{FP + TN}$

- These distinguish error type, but don't help with skew

- Precision = Correct positive predictions over the total number of positive predictions = $\frac{TP}{TP + FP}$

- Meaningful even for highly skewed data, since it only considers positive predictions.

- There are also more complicated measures that attempt to combine strengths of those above. E.g.:

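- F1 Score = Harmonic mean of precision and recall = $2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$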

- Both precision and recall must be high for the F1 score to be high.

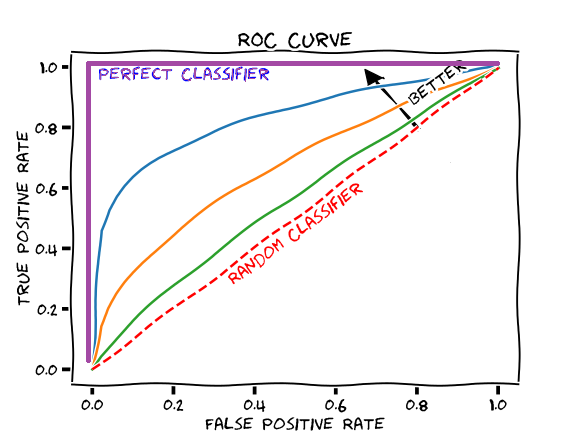
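The definitions above can be checked with a small sketch that computes each metric from the four confusion-matrix counts. The function name `classification_metrics` and the counts are hypothetical, chosen only to illustrate a skewed test set (10 positives, 990 negatives); they do not come from the slides.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute summary metrics from confusion-matrix counts.

    Ratios with a zero denominator are reported as 0.0 (a convention
    assumed here for illustration).
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # TPR
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "recall": recall,
        "fpr": fpr,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical classifier: finds 8 of 10 positives, raises 40 false alarms.
detector = classification_metrics(tp=8, fp=40, fn=2, tn=950)

# Hypothetical baseline that always predicts "negative".
always_negative = classification_metrics(tp=0, fp=0, fn=10, tn=990)

for name, metrics in [("detector", detector), ("always_negative", always_negative)]:
    print(name, {k: round(v, 3) for k, v in metrics.items()})
```

With these counts the always-negative baseline has higher accuracy than the detector even though its recall is zero, while the detector's low precision is hidden by its small FPR. This is the skew problem the bullets describe.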

Classifier Scores + ROC Curves + AUC

- Many classifiers can produce a continuous score (e.g., a predicted probability) rather than only a hard class label.

- This allows us to adjust our classifier's behavior by changing the classification threshold.

- Receiver Operating Characteristic (ROC) curve shows the impact of different threshold values:

(Image credit: https://glassboxmedicine.com/2019/02/23/measuring-performance-auc-auroc/)

- The Area Under the Curve (AUC) provides a single summary value (generally between 0.5 and 1.0) of the behavior across all possible thresholds.

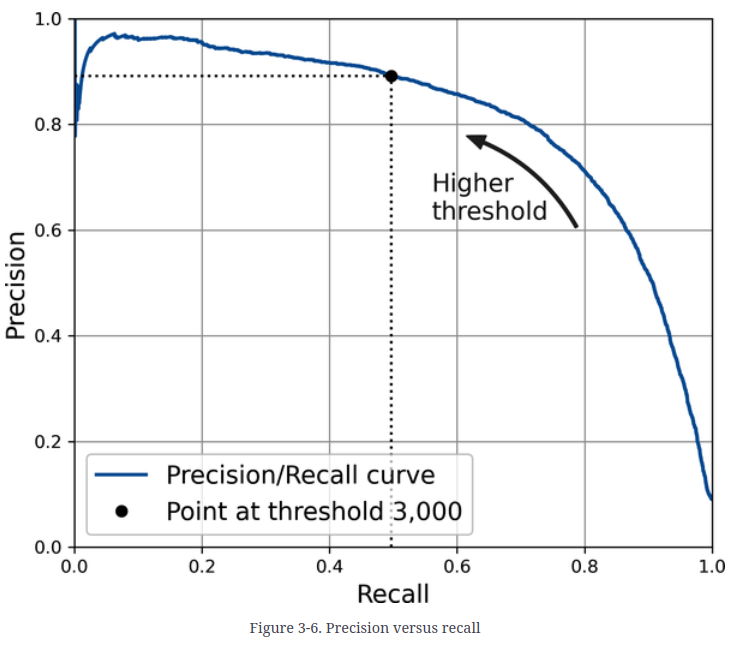
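As a rough illustration of how a ROC curve and its AUC arise from classifier scores, the sketch below sweeps the threshold from high to low, records an (FPR, TPR) point at each distinct score, and integrates with the trapezoid rule. The helper names `roc_points` and `auc`, along with the labels and scores, are made up for this example. In practice a library routine such as scikit-learn's `roc_curve` and `roc_auc_score` would normally be used instead.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points from sweeping the threshold high to low.

    Assumes y_true holds 0/1 labels with at least one of each class.
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    # Highest scores first: lowering the threshold admits them in this order.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])

    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Examples tied at the same score cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under a piecewise-linear curve via the trapezoid rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical labels and scores, for illustration only.
y_true = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]

curve = roc_points(y_true, scores)
area = auc(curve)
print(curve)
print(area)
```

The resulting area equals the fraction of (positive, negative) pairs in which the positive example receives the higher score, which is one common way to interpret AUC.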

Precision-Recall Curve

(Image credit: Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 3rd Edition, Aurélien Géron, O'Reilly, 2022.)

Quiz: Is the test set used to generate this image skewed?