Programming Assignment #1: N-Gram Warm-Up

Goals

The purpose of this programming assignment is to help you get up to speed with the Python programming language. This PA will also give you an opportunity to experiment with some simple algorithms for statistics-based natural language processing.

Part 1: Warm-up

Get started by completing the online tutorials at http://www.learnpython.org. You should complete all of the tutorials in the "Learn the Basics" section with the exception of "Classes and Objects".

Next, read the Object Oriented Features tutorial from Crash into Python.

Once you have completed the tutorials above, log into CodingBat. After you log in, follow the "prefs" link to enter your name and my email address (spragunr@jmu.edu) in the "Teacher Share" box. You must complete at least two exercises from each of the non-warmup categories (at least 12 in all).

Feel free to skim the tutorials above if you are already comfortable programming in Python. If you are new to Python, I strongly encourage you to work through each step of the tutorials carefully. It will probably take a couple of hours, but the time spent will pay dividends throughout the semester.

Part 2: Bigrams and Trigrams

Natural language processing (NLP) is the sub-field of AI that involves understanding and generating human language. It is a big area, and we won't spend much time on it this semester. The goal for this PA is to take a tiny step into NLP by using n-grams to generate random text in the style of particular authors or documents. Take a minute to read over the Wikipedia pages on n-grams and bigrams.

Your objective for this part of the PA is to complete the four unfinished functions in text_gen.py so that they correspond to the provided docstrings. You can use the unit tests in text_gen_tests.py to help test your code.

A text generation method based on unigrams is already implemented. You can test this by copying the file huck.txt* into the same folder as text_gen.py and executing the code. You should see output like the following:

tell shot up finn man if unloads on wonderful go know swear s myself no to good in and no home times a pick inside janeero s warn misunderstood sometimes sweat wouldn sakes and i away didn i as next furnish two the it put his dick take scared nor i on said we a was i blankets up poor bull him and asked what mary old and you that night en and comfortable all from and re it running we a lonesome but bible he up hitched a t a i telling says yarter hot call can if then
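To see why unigram output reads like a word salad, here is a minimal sketch of unigram-based generation. It is not the code in text_gen.py; the function names and the tiny word list are hypothetical and chosen only for illustration. Each word is drawn independently, weighted by how often it occurs, so no word has any influence on the one that follows it.

```python
import random
from collections import Counter


def count_unigrams(words):
    """Return a Counter mapping each word to how many times it occurs."""
    return Counter(words)


def random_unigram_text(counts, num_words, rng=random):
    """Draw num_words words independently, weighted by their counts.

    The names here are illustrative assumptions, not the text_gen.py API.
    """
    vocabulary = list(counts)
    weights = [counts[word] for word in vocabulary]
    return " ".join(rng.choices(vocabulary, weights=weights, k=num_words))


# A toy corpus standing in for the words of huck.txt.
words = "the cat sat on the mat and the dog sat too".split()
counts = count_unigrams(words)

# Passing a seeded generator makes the sample repeatable.
sample = random_unigram_text(counts, 8, random.Random(0))
print(sample)
```

Frequent words such as "the" show up often in the sample, but the word order carries no information, which matches the character of the output above.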

Text generated using bigrams and trigrams should look significantly more English-like.

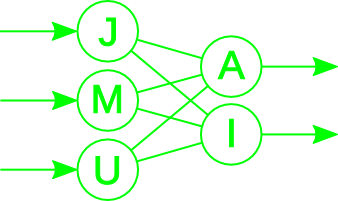

Note that the code in text_gen.py uses the terms "bigram" and "trigram" in slightly non-standard ways. Typically, bigrams encode the probability of particular word pairs. For example, bigrams could tell us that the probability of the sequence ("pineapple", "extrapolate") is relatively low, while the probability of the sequence ("going", "home") is relatively high. Since we are interested in generating text, it is more useful for us to store the probability that one word will follow another: the probability of observing "home" after "going" is much higher than the probability of observing "cup" after "going". It turns out that the two representations are interchangeable, as described on the Wikipedia bigram page.
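The "probability that one word will follow another" representation can be sketched as follows. This is only an illustration under stated assumptions: the function name, the nested-dictionary layout, and the sample sentence are hypothetical, and the docstrings in text_gen.py remain the authority on what your functions must return. The sketch counts each adjacent word pair and then divides by the total number of pairs that start with the first word, which is the usual way to turn pair counts into conditional probabilities.

```python
from collections import Counter, defaultdict


def follower_probabilities(words):
    """Map each word to a dict of {next_word: P(next_word | word)}.

    Hypothetical helper for illustration; not the text_gen.py interface.
    """
    # Count how often each word is immediately followed by each other word.
    pair_counts = defaultdict(Counter)
    for first, second in zip(words, words[1:]):
        pair_counts[first][second] += 1

    # Normalize each word's follower counts so they sum to 1.
    probabilities = {}
    for first, followers in pair_counts.items():
        total = sum(followers.values())
        probabilities[first] = {w: c / total for w, c in followers.items()}
    return probabilities


words = "i am going home and you are going home but he is going out".split()
probs = follower_probabilities(words)
print(probs["going"])
```

In this toy sentence "going" is followed by "home" twice and by "out" once, so "home" receives probability 2/3 and "out" receives 1/3, while a word that never follows "going" (such as "cup") does not appear in the dictionary at all.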

Submission and Rubric

You should submit the completed version of

and text_gen.py through Canvas by the deadline.

This project will be graded on the following scale:

| Component | Weight |
| --- | --- |
| CodingBat exercises | 20% |
| Functionality of bigram functions in text_gen.py | 70% |
| Functionality of trigram functions in text_gen.py | 10% |

Keep in mind that testing does not prove the correctness of code. It is possible to write incorrect code that passes the provided unit tests. As always, it is your responsibility to ensure that your code functions correctly.

* This is the complete text of the Adventures of Huckleberry Finn by Mark Twain, obtained from Project Gutenberg.