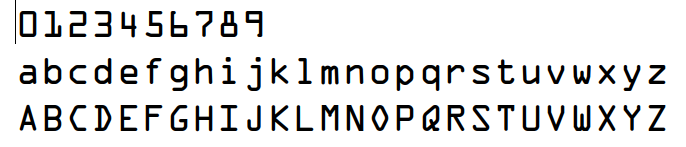

Image Analysis

An Introduction

Prof. David Bernstein

Computer Science Department

bernstdh@jmu.edu
